One day, I was searching for the cheapest possible VPS provider on LowEndBox that was also located in the EU. I finally settled on this beast of a server from RackNerd:

Strasbourg, France

Ubuntu 22.04 64 Bit

1 CPU Core (Included)

1.5 GB RAM

With a server as powerful as this, I needed a minimal OS that could handle running binaries, containers, or perhaps even a full orchestrator.

I settled on Flatcar Container Linux because it seemed interesting and fit my needs:

- minimal: a fork of CoreOS Container Linux (whose successor is now Fedora CoreOS)

- immutable filesystem: updates happen by loading another full release, and you can use systemd services or systemd-sysext to add your own software properly

- configurable before install via Butane/Ignition

- some container support already included

- has pretty good docs

Installation

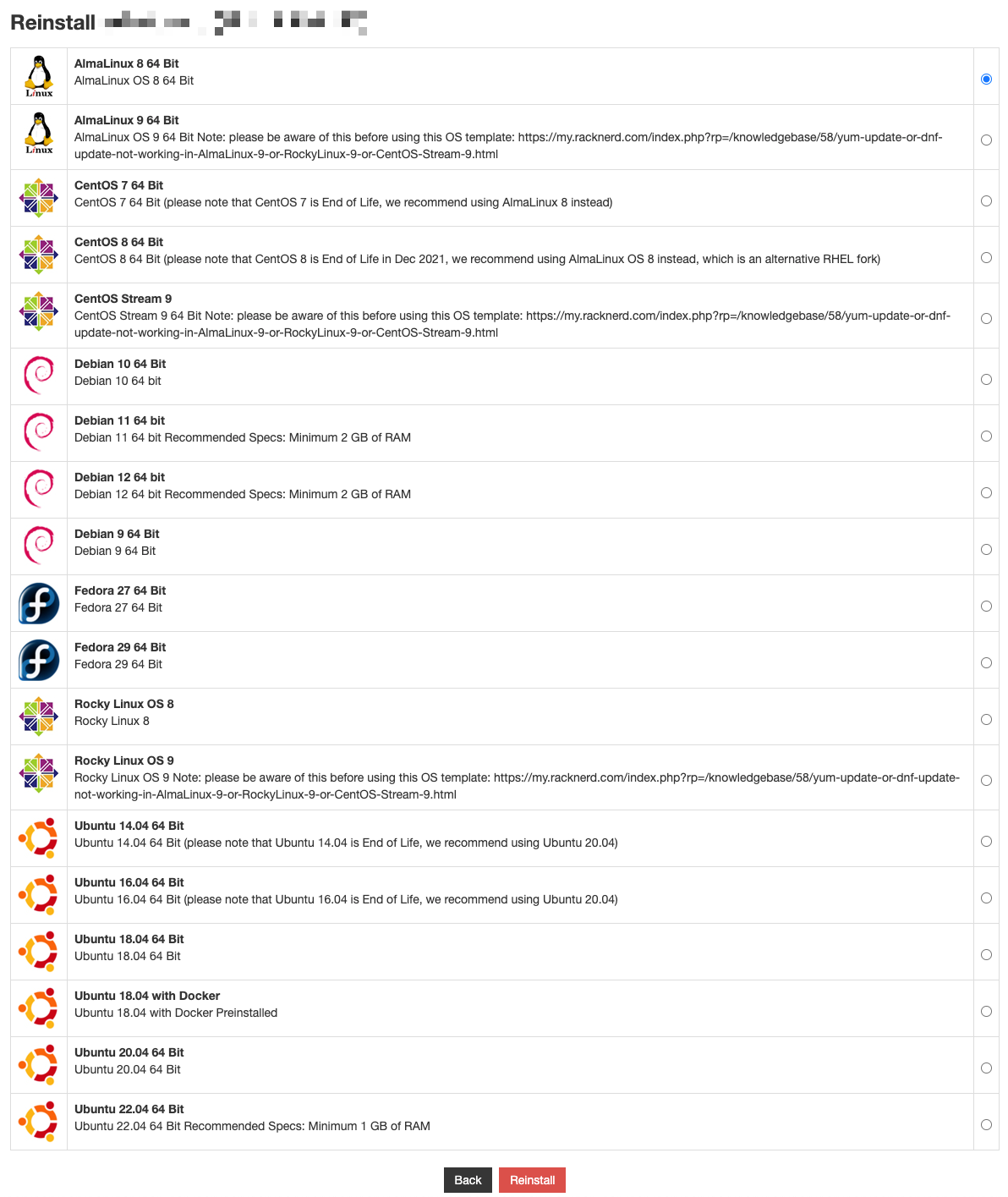

Installing Flatcar turned out to be a small challenge, as I assumed RackNerd wouldn’t offer it in their GUI.

New hope

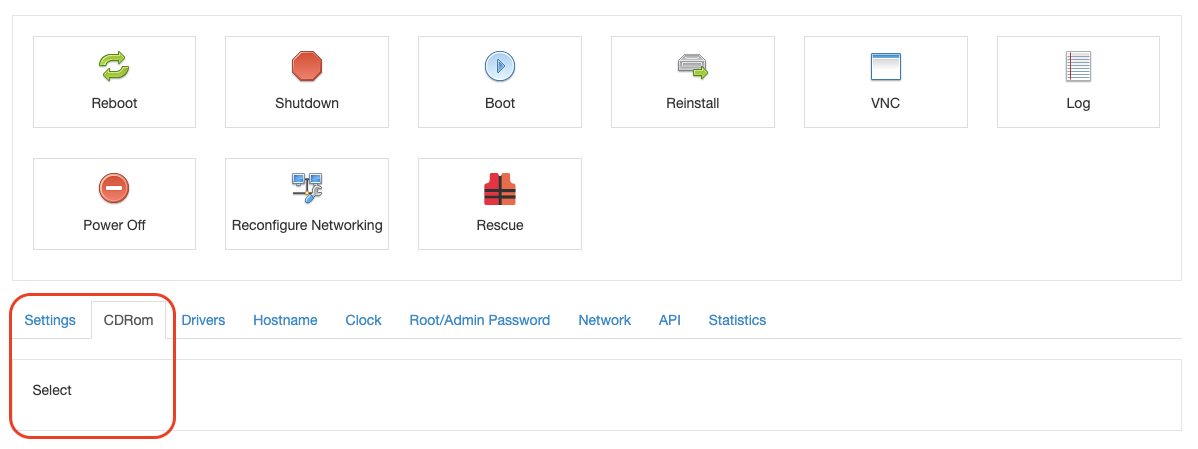

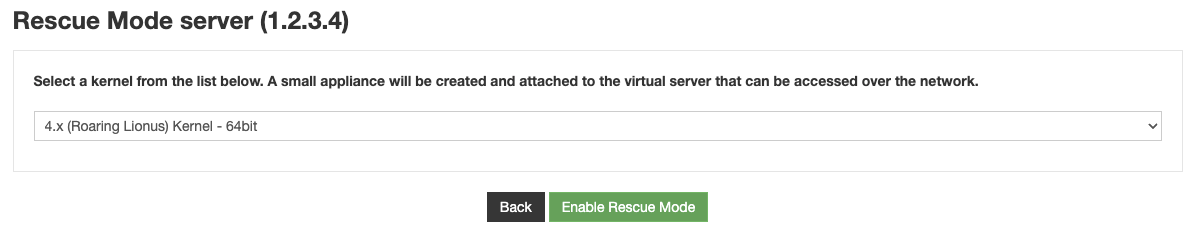

I was certain there had to be a better way to do this, and of course there was.

Flatcar provides full raw/bin images:

$ export FLATCAR_CHANNEL=stable

$ wget https://${FLATCAR_CHANNEL}.release.flatcar-linux.net/amd64-usr/current/flatcar_production_image.bin.bz2

$ bunzip2 flatcar_production_image.bin.bz2

I downloaded it and tried to put it on my rescue host. Of course, that didn't work: the compressed image is around 500 MB, but the rescue server had a 1 GB disk with less than 400 MB available.

$ df -h
Filesystem      Size  Used Avail Use% Mounted on
udev            110M     0  110M   0% /dev
tmpfs            25M  2.9M   22M  12% /run
/dev/vdb1       1.1G  660M  375M  64% /
tmpfs           121M     0  121M   0% /dev/shm
tmpfs           5.0M     0  5.0M   0% /run/lock
tmpfs           121M     0  121M   0% /sys/fs/cgroup
tmpfs            25M     0   25M   0% /run/user/0

But wait! Why bother downloading the image onto the rescue OS at all when I can write it to the VPS disk directly? I checked the path of my VPS disk, the unmounted 10 GB one: /dev/vda.

$ lsblk
NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
sr0     11:0    1 1024M  0 rom
vda    254:0    0   10G  0 disk
vdb    254:16   0  1.1G  0 disk
└─vdb1 254:17   0  1.1G  0 part /

I ran a command that reads the image file with cat, sends it via ssh, and writes it directly to the disk using dd.

$ cat flatcar_production_image.bin | ssh root@1.2.3.4 "dd of=/dev/vda bs=4M status=progress"

root@1.2.3.4's password: **********

430047232 bytes (430 MB, 410 MiB) copied, 4.00011 s, 108 MB/s

Et voilà!
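If no local copy of the image is wanted at all, the same idea can be run entirely on the rescue host by streaming the download through the decompressor into dd. The following is only a sketch: write_stream is a hypothetical helper name, and it assumes the rescue OS ships wget, bunzip2 and a dd that understands conv=fsync and status=none (GNU coreutils does), which I have not verified for RackNerd's rescue image.

```shell
# write_stream TARGET
# Hypothetical helper: copy everything arriving on stdin to TARGET
# (a block device or a regular file).
write_stream() {
    target="$1"
    # conv=fsync makes dd flush the data to TARGET before it exits.
    dd of="$target" bs=4M conv=fsync status=none
}

# Intended use on the rescue host (destructive: overwrites /dev/vda).
# Nothing is stored on the small rescue disk, the data only passes through pipes:
#
#   wget -qO- "https://stable.release.flatcar-linux.net/amd64-usr/current/flatcar_production_image.bin.bz2" \
#     | bunzip2 -c | write_stream /dev/vda
```

The function only wraps the dd step, so the download and decompression stages can be swapped for whatever tools the rescue system actually provides.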

Now we can check lsblk again; the new partitions should be visible.

$ lsblk
NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
sr0     11:0    1 1024M  0 rom
vda    254:0    0   10G  0 disk
├─vda1 254:1    0  128M  0 part
├─vda2 254:2    0    2M  0 part
├─vda3 254:3    0    1G  0 part
├─vda4 254:4    0    1G  0 part
├─vda6 254:6    0  128M  0 part
├─vda7 254:7    0   64M  0 part
└─vda9 254:9    0  2.1G  0 part
vdb    254:16   0  1.1G  0 disk
└─vdb1 254:17   0  1.1G  0 part /
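Seeing the partitions is a good sign, but it does not prove every byte arrived intact. One possible extra check, sketched here under the assumption that head and sha256sum are available on both machines, is to compare a checksum of the local image with a checksum of the same number of bytes read back from the disk. prefix_sha256 is a hypothetical helper name introduced for this example.

```shell
# prefix_sha256 PATH SIZE
# Hypothetical helper: print the SHA-256 of the first SIZE bytes of PATH.
prefix_sha256() {
    head -c "$2" "$1" | sha256sum | cut -d' ' -f1
}

# Intended use from the local machine, where the image file lives:
#
#   size=$(($(wc -c < flatcar_production_image.bin)))
#   local_sum=$(prefix_sha256 flatcar_production_image.bin "$size")
#   remote_sum=$(ssh root@1.2.3.4 "head -c $size /dev/vda | sha256sum | cut -d' ' -f1")
#   [ "$local_sum" = "$remote_sum" ] && echo "image verified"
```

Only the first SIZE bytes of the disk are hashed because the disk is larger than the image, so anything after the image is irrelevant to the comparison.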

But it’s not time to celebrate yet - if we rebooted the server now, it would start with Flatcar but without any custom configurations, meaning we wouldn’t even be able to log in via SSH.

With the Flatcar installation script, we can point to a specific ignition config that gets burned into the disk. However, since we already burned a raw image onto the disk, we need to handle this differently.

Looking at the partitions, I assumed /dev/vda6 was the OEM one.

├─vda6 254:6 0 128M 0 part

So I mounted it.

$ mount /dev/vda6 /mnt/
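The guess can also be checked against partition labels instead of sizes. This sketch assumes the Flatcar image gives the OEM partition the GPT partition label OEM, and that the rescue system's lsblk supports the PARTLABEL column; find_by_partlabel is a hypothetical helper name.

```shell
# find_by_partlabel LABEL
# Hypothetical helper: read "NAME PARTLABEL" pairs on stdin (one per line)
# and print the device names whose partition label equals LABEL exactly.
find_by_partlabel() {
    awk -v want="$1" '$2 == want { print $1 }'
}

# Intended use on the rescue host:
#   -n  no header line
#   -r  raw, space-separated output
#   -p  full device paths
#
#   lsblk -nrpo NAME,PARTLABEL /dev/vda | find_by_partlabel OEM
```

The exact comparison matters: a substring match would also pick up a partition labelled OEM-CONFIG, if one exists.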

I wrote a simple Butane config with my SSH key:

variant: flatcar
version: 1.1.0
passwd:
  users:
    - name: core
      ssh_authorized_keys:
        - ssh-ed25519 AAAA... me@0qa.cc

Then I used the butane utility to convert it into an Ignition config (you can skip this step and write the JSON config yourself):

$ butane -s -p config.bu > config.ign

My config.ign looked like this:

{
  "ignition": {
    "version": "3.4.0"
  },
  "passwd": {
    "users": [
      {
        "name": "core",
        "sshAuthorizedKeys": [
          "ssh-ed25519 AAAA... me@0qa.cc"
        ]
      }
    ]
  }
}
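For anyone skipping butane, a config of the same shape can be produced with a few lines of shell. This is a minimal sketch, not a replacement for butane's validation: make_ignition is a hypothetical helper name, and it assumes the public key contains no double quotes or backslashes, which would break the JSON.

```shell
# make_ignition KEY
# Hypothetical helper: print a minimal Ignition 3.4.0 config that authorizes
# the SSH public key KEY for the user "core".
# Assumes KEY contains no double quotes or backslashes.
make_ignition() {
    cat <<EOF
{
  "ignition": { "version": "3.4.0" },
  "passwd": {
    "users": [
      { "name": "core", "sshAuthorizedKeys": ["$1"] }
    ]
  }
}
EOF
}

# Intended use (the key path is an example, adjust to your own key):
#
#   make_ignition "$(cat ~/.ssh/id_ed25519.pub)" > config.ign
```

Unlike butane -s, this does no checking at all, so a typo only shows up when the machine boots and SSH login fails.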

I copied it to the mounted OEM partition on the server:

$ ls -l /mnt/

total 4

-rw-r--r-- 1 root root 313 Jul 12 12:28 config.ign

I disabled rescue mode, the machine rebooted, and…

$ ssh core@1.2.3.4

Flatcar Container Linux by Kinvolk stable 4230.2.1

It worked! Now I could generate additional ignition configs, reprovision, and start running some workloads.

For clarity, I omitted the host key mismatch warnings that come up when we switch between the VPS OS and the rescue OS and then reinstall the VPS OS. If we don't care about keeping the old host keys, we can remove the entries for a specific host with:

$ ssh-keygen -R "1.2.3.4"